The Road to LLM: What Does It Mean to Embed Words? [Day 4]

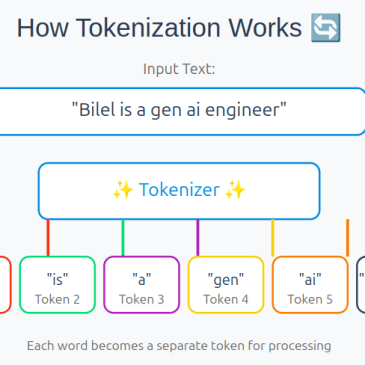

Hello everyone! So far, we have explored tokenizers, tools that divide text into units called tokens. In today's post, we'll dive into the concept of word embedding, using a machine learning model called Word2Vec, which focuses on word-level embeddings.

What Is Word Embedding?

Simply put, it looks like this:

| ID | Word |
| --- | --- |
| 1 | cat |
| 2 | dog |
| 3 | bird |
| 4 | fox |
| 5 | tiger |

…
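
As a rough illustration of the ID and word listing above, the sketch below maps each word to its ID and then looks up a vector for that ID. The vector size (`EMBEDDING_DIM`), the random placeholder values, and the `embed` helper are assumptions made for this example only; a trained Word2Vec model would learn its vectors from text rather than draw them at random.

```python
import random

# Vocabulary taken from the ID/word listing above (IDs start at 1).
words = ["cat", "dog", "bird", "fox", "tiger"]
word_to_id = {word: idx for idx, word in enumerate(words, start=1)}

# Hypothetical embedding size, chosen small so the vectors are easy to print.
EMBEDDING_DIM = 4

# Placeholder vectors: random numbers standing in for values a trained model would learn.
rng = random.Random(0)
embedding_table = {
    idx: [rng.uniform(-1.0, 1.0) for _ in range(EMBEDDING_DIM)]
    for idx in word_to_id.values()
}


def embed(word):
    """Look up the ID for a word, then return the vector stored for that ID."""
    return embedding_table[word_to_id[word]]


print(word_to_id["fox"])  # 4
print(len(embed("fox")))  # 4
```

The two-step lookup (word to ID, then ID to vector) is the point of the sketch: the ID is only an index, while the vector is what carries information about the word.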

![The Road to LLM: What Does It Mean to Embed Words? [Day 4]](https://i0.wp.com/kbilel.com/wp-content/uploads/2025/05/image.png?resize=365%2C365&ssl=1)