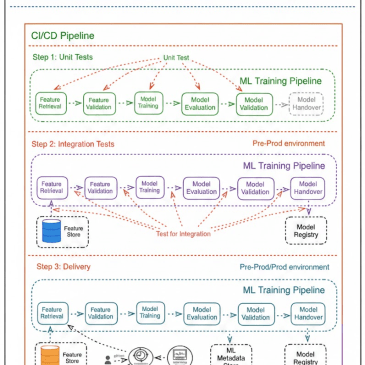

CI/CD for Machine Learning: It’s Not Just “Code + Data”

Hey everyone! It’s been a minute since my last post. Work has been absolutely crazy lately; I had a few tight deadlines that ate up all my free time. But I finally came up for air, and I wanted to document something I’ve been wrapping my head around recently. As an AI engineer, I thought I …

![The Road to LLM: What Does It Mean to Embed Words? [Day 4]](https://i0.wp.com/kbilel.com/wp-content/uploads/2025/05/image.png?resize=365%2C365&ssl=1)

![The road to LLM ~What is machine learning anyway? With an explanation of this project~[Day 1]](https://i0.wp.com/kbilel.com/wp-content/uploads/2024/08/image1-1.png?resize=365%2C365&ssl=1)