Hello everyone! 👋

In our previous article, we explored Natural Language Processing (NLP) and how computers need to convert text into numbers (distributed representations) to understand human language 🗣️ ➡️ 🔢

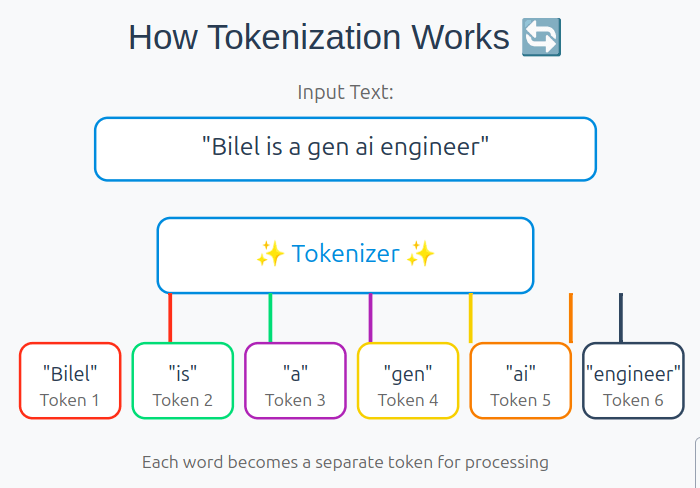

Today, we’ll examine the crucial step that makes this conversion possible: tokenization! ✨ A tokenizer breaks down text into smaller pieces that can be converted into those numerical representations we discussed. Let’s dive into what tokenizers are and how they work with practical examples in English and French 🇬🇧 🇫🇷

What is a Tokenizer? Let’s Organize Related Terms! 🤔

In machine learning models, inputs (whether images or text) must be converted to numbers. For text, we represent each unit as a vector; these vectors are called distributed representations (also known as embeddings).
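This is a minimal sketch in plain Python of how tokens become vectors. Each token is mapped to an integer ID through a vocabulary, and each ID selects one row of an embedding table. The tiny vocabulary, the 4-dimensional vectors and the random values are illustrative assumptions. A real model learns these vectors during training.

```python
import random

# Toy vocabulary built from a tiny, made-up list of tokens
corpus_tokens = ["my", "cat", "is", "pretty", "cute", "!"]
vocab = {token: idx for idx, token in enumerate(sorted(set(corpus_tokens)))}

# Toy embedding table: one 4-dimensional vector per vocabulary entry.
# Random values stand in for the vectors a real model would learn.
random.seed(0)
EMBED_DIM = 4
embedding_table = [
    [random.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
    for _ in range(len(vocab))
]

def embed(tokens):
    """Map each token to its ID, then look up that ID's vector."""
    ids = [vocab[token] for token in tokens]
    return ids, [embedding_table[i] for i in ids]

ids, vectors = embed(["my", "cat", "is", "cute"])
print(ids)  # [4, 1, 3, 2]
print(len(vectors), "vectors of size", len(vectors[0]))  # 4 vectors of size 4
```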

This raises an important question: « At what unit should we convert text into numbers? » For instance, given several sentences, should everything up to each period become one unit, or should each word be its own unit?
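The two options can be compared directly on a made-up two-sentence text. This sketch uses plain Python and naive splitting rules (split on periods, or take runs of word characters), which are simplifying assumptions rather than what real tokenizers do:

```python
import re

text = "My cat is cute. My cat is also lazy."

# Option 1: everything up to each period is one unit (sentence-level)
sentence_units = [s.strip() for s in text.split(".") if s.strip()]

# Option 2: each word is one unit (word-level), punctuation dropped
word_units = re.findall(r"\w+", text)

print(sentence_units)  # ['My cat is cute', 'My cat is also lazy']
print(word_units)
print(len(set(word_units)))  # 6 distinct words
```

Word-level units such as « My », « cat » and « is » repeat across sentences, so a model can reuse what it learns about them. Whole sentences almost never repeat.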

In practice, different NLP models work with different units. These units are called tokens: pieces of text split according to specific rules.

There are three main types of tokens:

- Word-level tokens
  - Divide text into individual words
  - Easy for languages like English, where spaces naturally separate words
  - Harder for languages without explicit word boundaries, such as Japanese or Chinese
  - Example: « The weather is nice today » → [« The », « weather », « is », « nice », « today »]
- Character-level tokens
  - Split text character by character
  - Example: « Hello » → [« H », « e », « l », « l », « o »]
- Subword-level tokens
  - Further divide words into smaller pieces, called subwords
  - Example: « playing » → [« play », « ##ing »]
  - The ## prefix marks a subword that attaches to the previous one (the convention used by WordPiece tokenizers such as BERT's)
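The trade-off between word-level and character-level tokens shows up quickly when you count them. This is a small sketch in plain Python. It assumes a space-separated English sentence, so `str.split()` is good enough as a word tokenizer here:

```python
text = "the cat sat on the mat"

# Word-level: split on whitespace (works for space-separated languages)
word_tokens = text.split()

# Character-level: every character, including spaces, is a token
char_tokens = list(text)

# (number of tokens, number of distinct tokens)
print(len(word_tokens), len(set(word_tokens)))  # 6 5
print(len(char_tokens), len(set(char_tokens)))  # 22 10
```

Character-level tokens keep the vocabulary tiny, because it is bounded by the alphabet, but they produce much longer sequences. Word-level tokens give short sequences, but the vocabulary grows with every new word. Subwords sit between the two.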

This process of breaking text into tokens is called tokenization, and the tool that performs this is called a tokenizer.
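In code, a tokenizer is simply a function from a string to a list of tokens. Subword tokenizers are the least obvious of the three types. Below is a minimal sketch of the greedy longest-match-first idea that WordPiece-style tokenizers use to split a word. The function name and the vocabulary are hypothetical toys. Real tokenizers learn vocabularies of tens of thousands of entries from data, and they normalize text and split off punctuation before this step.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedy longest-match-first subword split (WordPiece style).

    Pieces after the first one are looked up with a '##' prefix.
    """
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            # No piece matched: the whole word becomes unknown
            return [unk_token]
        tokens.append(piece)
        start = end
    return tokens

# Hypothetical toy vocabulary, for illustration only
toy_vocab = {"play", "jump", "##ing", "##ed", "##s"}

print(wordpiece_tokenize("playing", toy_vocab))  # ['play', '##ing']
print(wordpiece_tokenize("jumps", toy_vocab))    # ['jump', '##s']
print(wordpiece_tokenize("swim", toy_vocab))     # ['[UNK]']
```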

Let’s Try Using Tokenizers! 💻

Let’s experiment with tokenizers using Python code. We’ll use Google Colab (CPU runtime) as our environment.

English example:

```python
import nltk
from nltk.tokenize import word_tokenize

# Only needed on the first run: download the tokenizer data.
# Recent NLTK versions look for 'punkt_tab', older ones for 'punkt',
# so we request both.
nltk.download('punkt')
nltk.download('punkt_tab')

text = "My cat is pretty cute!"
tokens = word_tokenize(text)
print(tokens)
# Output: ['My', 'cat', 'is', 'pretty', 'cute', '!']
```
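Why not just call `str.split()`? The sketch below shows that naive whitespace splitting leaves punctuation glued to the word. It also includes a rough regex approximation that separates it. The regex is an assumption for illustration and is not NLTK's algorithm.

```python
import re

text = "My cat is pretty cute!"

# Naive whitespace splitting leaves punctuation attached
naive_tokens = text.split()

# Rough approximation: runs of word characters, or single punctuation marks
regex_tokens = re.findall(r"\w+|[^\w\s]", text)

print(naive_tokens)  # ['My', 'cat', 'is', 'pretty', 'cute!']
print(regex_tokens)  # ['My', 'cat', 'is', 'pretty', 'cute', '!']
```

The regex happens to match NLTK on this sentence, but it breaks on contractions. It splits "don't" into ['don', "'", 't'], whereas NLTK's `word_tokenize` returns ['do', "n't"].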

Let’s Try Another Language (French):

First, install spaCy and its small French model (in a Colab cell, prefix each command with !):

```bash
pip install spacy
python -m spacy download fr_core_news_sm
```

Then try tokenization:

```python
import spacy

# Load the small French pipeline, which includes a French tokenizer
nlp = spacy.load('fr_core_news_sm')

# Split text into tokens
text = "Le chat est vraiment mignon. C'est indiscutable. Tous les chats sont mignons."
doc = nlp(text)
tokens = [token.text for token in doc]
print(tokens)
# Output: ['Le', 'chat', 'est', 'vraiment', 'mignon', '.', "C'", 'est', 'indiscutable', '.', 'Tous', 'les', 'chats', 'sont', 'mignons', '.']
```
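Note how "C'est" became "C'" and "est". This is elision, where a French word drops its final vowel and attaches to the next word with an apostrophe. The toy regex below mimics that one rule. It is a rough illustration, not spaCy's approach: spaCy's tokenizer combines prefix, suffix and infix rules with special-case exceptions.

```python
import re

text = "Le chat est vraiment mignon. C'est indiscutable."

# Toy rule: a word followed by an apostrophe becomes its own token
# (elision, e.g. "C'est" -> "C'" + "est"); otherwise words or punctuation.
pattern = r"\w+'|\w+|[^\w\s]"
tokens = re.findall(pattern, text)
print(tokens)
# ['Le', 'chat', 'est', 'vraiment', 'mignon', '.', "C'", 'est', 'indiscutable', '.']
```

A single rule like this is too blunt for real French. Words such as "aujourd'hui" contain an apostrophe but form one word, which is why real tokenizers keep exception lists.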

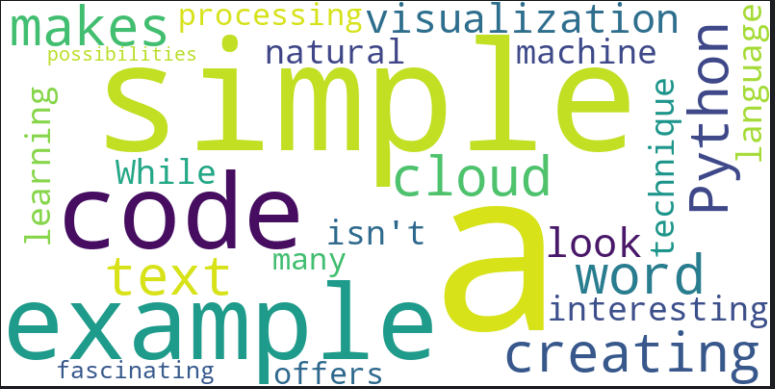

Let’s Visualize with WordCloud! 📊

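(Optional) WordCloud’s default font works for English and French. You only need a Japanese font such as IPA Gothic if you later want to draw Japanese words. In that case, install it and pass its file path to WordCloud through the font_path parameter (in a Colab cell, prefix the command with !):

```bash
apt-get -y install fonts-ipafont-gothic
```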

Then run this code:

```python
from collections import Counter

import spacy
from wordcloud import WordCloud
import matplotlib.pyplot as plt

# Initialize an English tokenizer: the sample text is English, and
# spacy.blank("en") gives spaCy's English tokenizer without a model download
nlp = spacy.blank("en")

input_text_data = """
This is a simple example code for creating a word cloud.
Python makes text visualization look interesting.
While this isn't a machine learning technique,
natural language processing offers many fascinating possibilities.
"""

# Define stopwords (words to exclude)
stopwords = {"a", "the", "is", "and", "to", "of", "in", "for", "this", "that"}

# Tokenize with spaCy; keep lowercase alphabetic tokens that are not stopwords
tokens = [
    token.text.lower()
    for token in nlp(input_text_data)
    if token.is_alpha and token.text.lower() not in stopwords
]

# Generate the word cloud from token frequencies
wordcloud = WordCloud(
    width=800,
    height=400,
    background_color="white",
).generate_from_frequencies(Counter(tokens))

# Drawing settings
plt.figure(figsize=(10, 5))
plt.imshow(wordcloud, interpolation="bilinear")
plt.axis("off")

# Save the image instead of showing it
plt.savefig("wordcloud.png", bbox_inches="tight", pad_inches=0)
plt.close()

print("Word cloud has been saved as 'wordcloud.png'")
```
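Why bother with a stopword list? Function words such as "the" and "and" are usually the most frequent tokens, so without filtering they would be drawn largest. The minimal standard-library sketch below runs on a made-up sentence. It uses a simple regex as a stand-in tokenizer, not WordCloud's or spaCy's tokenization.

```python
import re
from collections import Counter

# Made-up example sentence for illustration
text = "the cat chased the dog and the cat won the race"
stopwords = {"the", "is", "and", "to", "of", "in", "for", "this", "that"}

# Simple stand-in tokenizer: lowercase runs of word characters
tokens = re.findall(r"\w+", text.lower())

all_counts = Counter(tokens)
filtered_counts = Counter(t for t in tokens if t not in stopwords)

print(all_counts.most_common(1))       # [('the', 4)]
print(filtered_counts.most_common(1))  # [('cat', 2)]
```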

In Conclusion ✨

Today we explored tokenizers and saw practical code examples. Think of tokenization as the crucial first step in natural language processing tasks, where text is divided into meaningful units according to specific rules.

Next time, we’ll use what we learned about tokenizers to run a machine learning model! Stay tuned! 👋